autotorch.scheduler¶

FIFOScheduler¶

-

class

autotorch.scheduler.FIFOScheduler(train_fn, args=None, resource=None, searcher=None, search_options=None, checkpoint='./exp/checkpoint.ag', resume=False, num_trials=None, time_out=None, max_reward=1.0, time_attr='epoch', reward_attr='accuracy', visualizer='none', dist_ip_addrs=None)[source]¶ Simple scheduler that just runs trials in submission order.

- Parameters

train_fn (callable) – A task launch function for training. Note: please add the @autotorch_method decorater to the original function.

args (object (optional)) – Default arguments for launching train_fn.

resource (dict) – Computation resources. For example, {‘num_cpus’:2, ‘num_gpus’:1}

searcher (str or object) – Autotorch searcher. For example, autotorch.searcher.self.argsRandomSampling

time_attr (str) – A training result attr to use for comparing time. Note that you can pass in something non-temporal such as training_epoch as a measure of progress, the only requirement is that the attribute should increase monotonically.

reward_attr (str) – The training result objective value attribute. As with time_attr, this may refer to any objective value. Stopping procedures will use this attribute.

dist_ip_addrs (list of str) – IP addresses of remote machines.

Examples

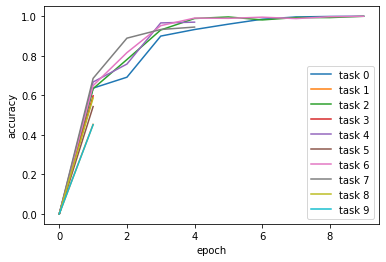

>>> import numpy as np >>> import autotorch as at >>> @at.args( ... lr=at.Real(1e-3, 1e-2, log=True), ... wd=at.Real(1e-3, 1e-2)) >>> def train_fn(args, reporter): ... print('lr: {}, wd: {}'.format(args.lr, args.wd)) ... for e in range(10): ... dummy_accuracy = 1 - np.power(1.8, -np.random.uniform(e, 2*e)) ... reporter(epoch=e, accuracy=dummy_accuracy, lr=args.lr, wd=args.wd) >>> scheduler = at.scheduler.FIFOScheduler(train_fn, ... resource={'num_cpus': 2, 'num_gpus': 0}, ... num_trials=20, ... reward_attr='accuracy', ... time_attr='epoch') >>> scheduler.run() >>> scheduler.join_jobs() >>> scheduler.get_training_curves(plot=True)

-

add_job(task, **kwargs)[source]¶ Adding a training task to the scheduler.

- Parameters

task (

autotorch.scheduler.Task) – a new training task

- Relevant entries in kwargs:

bracket: HB bracket to be used. Has been sampled in _promote_config

new_config: If True, task starts new config eval, otherwise it promotes a config (only if type == ‘promotion’)

- Only if new_config == False:

config_key: Internal key for config

resume_from: config promoted from this milestone

milestone: config promoted to this milestone (next from resume_from)

-

get_training_curves(filename=None, plot=False, use_legend=True)[source]¶ Get Training Curves

- Parameters

filename (str) –

plot (bool) –

use_legend (bool) –

Examples

>>> scheduler.run() >>> scheduler.join_jobs() >>> scheduler.get_training_curves(plot=True)

-

load_state_dict(state_dict)[source]¶ Load from the saved state dict.

Examples

>>> scheduler.load_state_dict(at.load('checkpoint.ag'))

-

run_with_config(config)[source]¶ Run with config for final fit. It launches a single training trial under any fixed values of the hyperparameters. For example, after HPO has identified the best hyperparameter values based on a hold-out dataset, one can use this function to retrain a model with the same hyperparameters on all the available labeled data (including the hold out set). It can also returns other objects or states.

HyperbandScheduler¶

-

class

autotorch.scheduler.HyperbandScheduler(train_fn, args=None, resource=None, searcher=None, search_options=None, checkpoint='./exp/checkpoint.ag', resume=False, num_trials=None, time_out=None, max_reward=1.0, time_attr='epoch', reward_attr='accuracy', max_t=100, grace_period=10, reduction_factor=4, brackets=1, visualizer='none', type='stopping', dist_ip_addrs=None, keep_size_ratios=False, maxt_pending=False)[source]¶ Implements different variants of asynchronous Hyperband

See ‘type’ for the different variants. One implementation detail is when using multiple brackets, task allocation to bracket is done randomly based on a softmax probability.

- Parameters

train_fn (callable) – A task launch function for training.

args (object, optional) – Default arguments for launching train_fn.

resource (dict) – Computation resources. For example, {‘num_cpus’:2, ‘num_gpus’:1}

searcher (object, optional) – Autotorch searcher. For example,

autotorch.searcher.RandomSearchertime_attr (str) – A training result attr to use for comparing time. Note that you can pass in something non-temporal such as training_epoch as a measure of progress, the only requirement is that the attribute should increase monotonically.

reward_attr (str) – The training result objective value attribute. As with time_attr, this may refer to any objective value. Stopping procedures will use this attribute.

max_t (float) – max time units per task. Trials will be stopped after max_t time units (determined by time_attr) have passed.

grace_period (float) – Only stop tasks at least this old in time. Also: min_t. The units are the same as the attribute named by time_attr.

reduction_factor (float) – Used to set halving rate and amount. This is simply a unit-less scalar.

brackets (int) – Number of brackets. Each bracket has a different grace period, all share max_t and reduction_factor. If brackets == 1, we just run successive halving, for brackets > 1, we run Hyperband.

type (str) –

- Type of Hyperband scheduler:

- stopping:

See

HyperbandStopping_Manager. Tasks and config evals are tightly coupled. A task is stopped at a milestone if worse than most others, otherwise it continues. As implemented in Ray/Tune: https://ray.readthedocs.io/en/latest/tune-schedulers.html#asynchronous-hyperband- promotion:

See

HyperbandPromotion_Manager. A config eval may be associated with multiple tasks over its lifetime. It is never terminated, but may be paused. Whenever a task becomes available, it may promote a config to the next milestone, if better than most others. If no config can be promoted, a new one is chosen. This variant may benefit from pause&resume, which is not directly supported here. As proposed in this paper (termed ASHA): https://arxiv.org/abs/1810.05934

keep_size_ratios (bool) – Implemented for type ‘promotion’ only. If True, promotions are done only if the (current estimate of the) size ratio between rung and next rung are 1 / reduction_factor or better. This avoids higher rungs to get more populated than they would be in synchronous Hyperband. A drawback is that promotions to higher rungs take longer.

maxt_pending (bool) – Relevant only if a model-based searcher is used. If True, register pending config at level max_t whenever a new evaluation is started. This has a direct effect on the acquisition function (for model-based variant), which operates at level max_t. On the other hand, it decreases the variance of the latent process there. NOTE: This could also be removed…

dist_ip_addrs (list of str) – IP addresses of remote machines.

Examples

>>> import numpy as np >>> import autotorch as at >>> >>> @at.args( ... lr=at.Real(1e-3, 1e-2, log=True), ... wd=at.Real(1e-3, 1e-2)) >>> def train_fn(args, reporter): ... print('lr: {}, wd: {}'.format(args.lr, args.wd)) ... for e in range(10): ... dummy_accuracy = 1 - np.power(1.8, -np.random.uniform(e, 2*e)) ... reporter(epoch=e, accuracy=dummy_accuracy, lr=args.lr, wd=args.wd) >>> scheduler = at.scheduler.HyperbandScheduler(train_fn, ... resource={'num_cpus': 2, 'num_gpus': 0}, ... num_trials=20, ... reward_attr='accuracy', ... time_attr='epoch', ... grace_period=1) >>> scheduler.run() >>> scheduler.join_jobs() >>> scheduler.get_training_curves(plot=True)

-

add_job(task, **kwargs)[source]¶ Adding a training task to the scheduler.

- Parameters

task (

autotorch.scheduler.Task) – a new training task

Relevant entries in kwargs:

bracket: HB bracket to be used. Has been sampled in _promote_config

new_config: If True, task starts new config eval, otherwise it promotes a config (only if type == ‘promotion’)

Only if new_config == False:

config_key: Internal key for config

resume_from: config promoted from this milestone

milestone: config promoted to this milestone (next from resume_from)